Evaluation of sensors as input devices in computer music interfaces

| Participants: | Mark T. Marshall, Marcelo M. Wanderley (supervisor) |
|---|---|
| Funding: | |
| Project Type: | |
| Time Period: | (Completed.) |

This project is aimed at the evaluation of input devices for musical expression. One axis of research in this direction is the study of existing sensor devices as the primary means of interaction in interfaces for computer music. It examines the relationships between sensor types, specific musical functions and forms of feedback, building on initial work by Vertegaal and colleagues [1]. This research involves a number of steps, including:

- Survey of sensors currently in use in computer musical interfaces

- Classification of sensors based on the form of input sensed

- Definition of specific musical tasks for evaluation of the sensors

- Evaluation of the sensors to determine any mappings between sensor type and task type

- Examination of the effects of additional feedback on sensor-task mappings

Survey of sensors currently in use in computer musical interfaces

The first step in the evaluation process was to determine which sensors are currently used in computer music interfaces. To this end, a survey was made of all of the papers and posters presented at the New Interfaces for Musical Expression (NIME) conferences from 2000 to 2004 inclusive, covering over 130 instruments and interfaces. From this survey, a list of the most commonly used sensors was compiled, and a number of these sensors were then classified and used in the evaluation process.

Classification of sensors based on the form of input sensed

Previous work has attempted to show a link between certain sensors and certain musical tasks [1]. That work classified sensors according to the form of input they sense (e.g. linear position, rotary position, isometric force). A modified version of its classification scheme was adopted here, resulting in the following classes of sensors:

- linear position

- rotary position

- isometric force

- isotonic force

- velocity

The sensors which were found in the survey were then classified using this scheme.

Definition of specific musical tasks for evaluation of the sensors

As well as a classification scheme for sensors, [1] also proposes a simple three-category classification scheme for musical tasks:

- Absolute dynamic

- Relative dynamic

- Static

An example of applying this classification to simple musical tasks is given in [2]. Using these classifications, a set of tasks was defined for evaluating the sensors: two simple tasks and one complex task, which involved performing the two simple tasks simultaneously. The tasks were also chosen to represent basic actions that commonly occur in musical performance. The tasks and their classifications are as follows:

| Task | Classification |
|---|---|
| Note selection | Absolute dynamic |
| Vibrato | Relative dynamic |

Evaluation of the sensors to determine any mappings between sensor type and task type

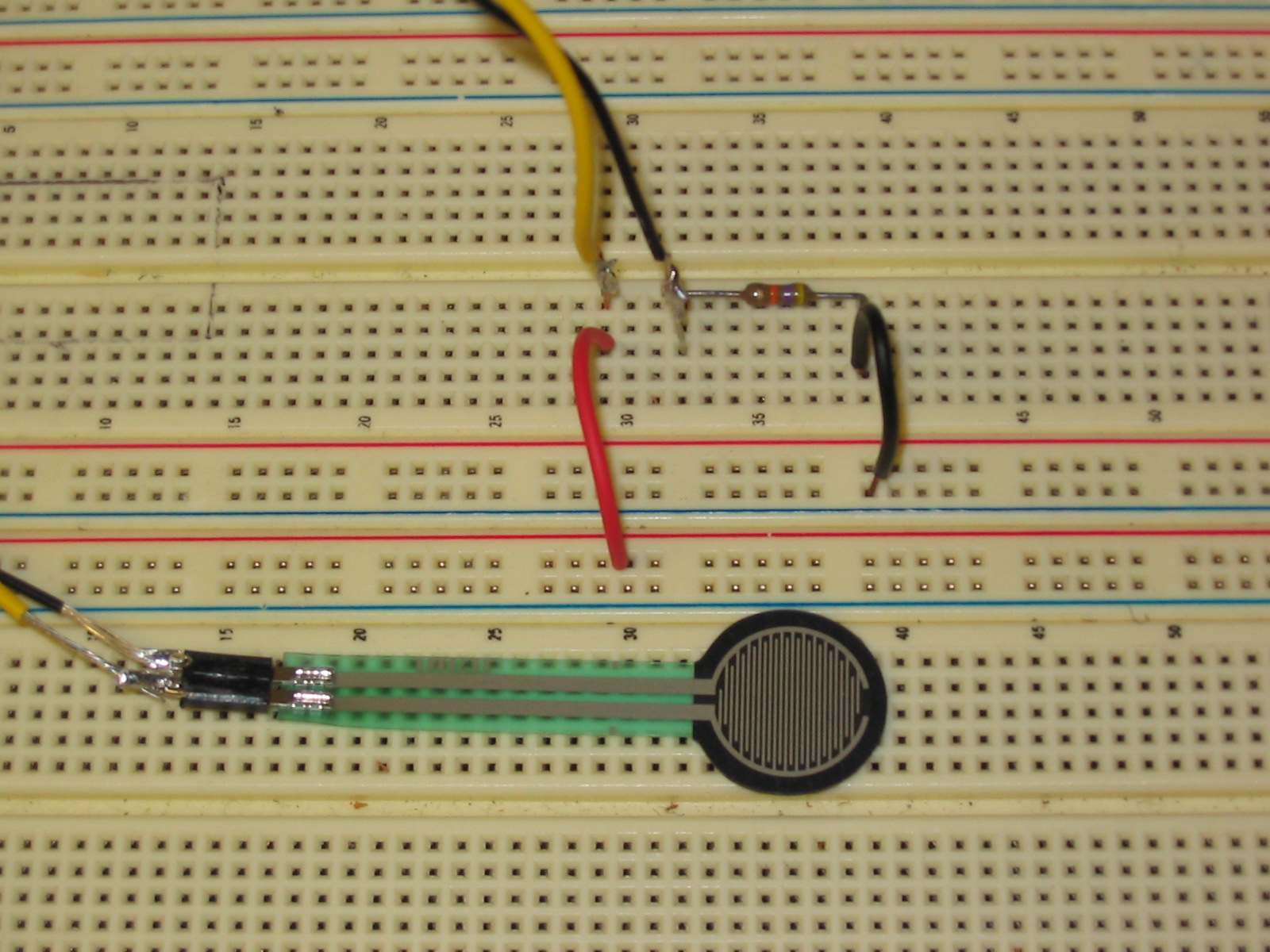

The sensors were evaluated through a user study in which participants were asked to perform all three tasks with a selection of sensors. As performing the tasks with every available sensor would have been too time-consuming, a representative group was chosen, consisting of the six most commonly used sensors found in the survey. The sensors used (and their classifications) were as follows:

| Sensor | Classification |
|---|---|
| Linear potentiometer (fader) | Linear position |
| Rotary potentiometer | Rotary position |
| Linear position sensor (ribbon controller) | Linear position |
| Accelerometer | Velocity |
| Force sensing resistor | Isometric force |
| Bend sensor | Rotary position |
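The sensors in the table, together with the sensor classes defined earlier, can be captured in a small data structure. The sketch below is an illustration only; the names `SensorClass`, `STUDY_SENSORS` and `sensors_by_class` are hypothetical and not part of the project.

```python
from collections import defaultdict
from enum import Enum


class SensorClass(Enum):
    """Sensor classes from the modified classification scheme (hypothetical encoding)."""
    LINEAR_POSITION = "linear position"
    ROTARY_POSITION = "rotary position"
    ISOMETRIC_FORCE = "isometric force"
    ISOTONIC_FORCE = "isotonic force"
    VELOCITY = "velocity"


# The six sensors used in the user study, classified as in the table above.
STUDY_SENSORS = {
    "Linear potentiometer (fader)": SensorClass.LINEAR_POSITION,
    "Rotary potentiometer": SensorClass.ROTARY_POSITION,
    "Linear position sensor (ribbon controller)": SensorClass.LINEAR_POSITION,
    "Accelerometer": SensorClass.VELOCITY,
    "Force sensing resistor": SensorClass.ISOMETRIC_FORCE,
    "Bend sensor": SensorClass.ROTARY_POSITION,
}


def sensors_by_class(sensors):
    """Group sensor names under their class, preserving table order."""
    groups = defaultdict(list)
    for name, cls in sensors.items():
        groups[cls].append(name)
    return dict(groups)


if __name__ == "__main__":
    for cls, names in sensors_by_class(STUDY_SENSORS).items():
        print(f"{cls.value}: {', '.join(names)}")
```

Grouping the table this way makes it easy to see that two classes each contain two of the study sensors, and that none of the six falls in the isotonic force class.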

In the evaluation procedure, each sensor controlled the pitch of a synthesis system. One octave (12 semitones) of a major scale was mapped over the sensor's dynamic range. To play a note, the user manipulated the sensor with the primary hand until the desired pitch was selected, then pressed a key with the secondary hand to sound the note.
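The mapping of a scale onto the sensor range can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the project's Max/MSP patch: it assumes a raw 10-bit reading (0 to 1023, matching the converter described in the setup), reads the mapped range as one octave of a major scale (eight notes spanning 12 semitones), divides the range into equal-width bins, and uses a hypothetical base note of MIDI 60 (middle C).

```python
# Assumption: one octave of the major scale, including the upper tonic
# (eight notes spanning 12 semitones).
MAJOR_SCALE_OFFSETS = (0, 2, 4, 5, 7, 9, 11, 12)

ADC_MAX = 1023  # largest value of a 10-bit reading


def sensor_to_midi_note(raw, base_note=60, adc_max=ADC_MAX, offsets=MAJOR_SCALE_OFFSETS):
    """Quantize a raw sensor reading to a MIDI note of the major scale.

    The range [0, adc_max] is split into len(offsets) equal-width bins
    (an assumed mapping); each bin selects one scale degree above base_note.
    """
    if not 0 <= raw <= adc_max:
        raise ValueError(f"raw reading {raw} outside 0..{adc_max}")
    # Normalize to [0, 1], then pick a bin; min() keeps raw == adc_max in the top bin.
    position = raw / adc_max
    index = min(int(position * len(offsets)), len(offsets) - 1)
    return base_note + offsets[index]
```

For example, `[sensor_to_midi_note(r) for r in (0, 300, 600, 1023)]` returns `[60, 64, 67, 72]`. Equal-width bins are the simplest choice; the project text does not say how the range was actually divided.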

In the system setup, the sensor and the key were connected to a USB-based analog-to-digital converter operating with 10-bit resolution at a sample rate of 100 Hz. The sensor and key data were fed into Max/MSP running on an Apple Mac G5. The synthesis system used simple waveshaping synthesis, with only the pitch and the note activation controlled by the user.
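The synthesis side can be sketched as follows. The project does not describe its Max/MSP waveshaping patch, so this is a generic Python example of Chebyshev-polynomial waveshaping, a standard form of the technique, and not a reconstruction of the original. The 44.1 kHz audio sample rate, the harmonic weights and the function names are assumptions chosen for illustration; the 100 Hz figure above is the sensor sampling rate, not the audio rate.

```python
import math


def midi_to_hz(note):
    """Equal-tempered frequency of a MIDI note number (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


def chebyshev(k, x):
    """Chebyshev polynomial T_k(x), via the recurrence T_{k+1} = 2x*T_k - T_{k-1}."""
    t_prev, t = 1.0, x
    if k == 0:
        return t_prev
    for _ in range(k - 1):
        t_prev, t = t, 2.0 * x * t - t_prev
    return t


def waveshape_note(note, duration_s, sample_rate=44100, weights=(1.0, 0.5, 0.25)):
    """Render a note by passing a cosine through a Chebyshev transfer function.

    Because T_k(cos(theta)) == cos(k * theta), weights[k - 1] sets the
    amplitude of harmonic k when the input cosine has full amplitude.
    The sample rate and weights are illustrative assumptions.
    """
    freq = midi_to_hz(note)
    norm = sum(abs(w) for w in weights)  # scale so output stays within [-1, 1]
    samples = []
    for n in range(int(duration_s * sample_rate)):
        x = math.cos(2.0 * math.pi * freq * n / sample_rate)
        y = sum(w * chebyshev(k, x) for k, w in enumerate(weights, start=1))
        samples.append(y / norm)
    return samples
```

Here, as in the original setup, only the pitch (`note`) and the note's activation (whether `waveshape_note` is called) would be under user control; the timbre is fixed by the weights.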

A number of users, all with musical experience and most with computer music experience, attempted each of the three tasks with each of the sensors. Data from the evaluation was captured in several ways. First, the time taken to perform each task successfully with each sensor was measured. Second, the sound output of the system was recorded so that the quality of the output could be examined. Finally, after completing each task, users were asked to rank the sensors by suitability for that task. Together, these measures allow an examination of each sensor's learnability and ease of use, and of the users' attitude towards it. These parameters form a subset of those proposed in [3] as necessary for evaluating the usability of a system, and are similar to the parameters suggested in [4] for evaluating interfaces for computer music.
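The project does not state how the suitability rankings were aggregated. The sketch below shows two simple summaries one might compute: the share of participants placing each sensor first (the kind of percentage reported in the preliminary results) and each sensor's mean rank. Function names are hypothetical, and the example rankings are invented placeholders, not study data.

```python
from collections import Counter


def first_preference_share(rankings):
    """Fraction of participants who ranked each sensor first.

    rankings: one list per participant, most suitable sensor first.
    """
    firsts = Counter(r[0] for r in rankings)
    return {sensor: count / len(rankings) for sensor, count in firsts.items()}


def mean_rank(rankings):
    """Average position (1 = most suitable) of each sensor across participants."""
    positions = {}
    for ranking in rankings:
        for pos, sensor in enumerate(ranking, start=1):
            positions.setdefault(sensor, []).append(pos)
    return {sensor: sum(p) / len(p) for sensor, p in positions.items()}


if __name__ == "__main__":
    # Placeholder rankings from three imaginary participants, for illustration only.
    example = [
        ["S1", "S2", "S3"],
        ["S1", "S3", "S2"],
        ["S2", "S1", "S3"],
    ]
    print(first_preference_share(example))
    print(mean_rank(example))
```

Mean rank uses the whole ordering, while the first-preference share only looks at each participant's top choice; the two can disagree when preferences are split.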

Preliminary Results

Users have shown strong consistency in the preference ratings they give the sensors, which suggests that a mapping does exist from sensor types to task types. In the note selection task, all users gave their first preference to a position sensor. Of these, 83% ranked a linear position sensor first and a rotary position sensor second; the remaining participants showed the reverse order. For the note modulation (vibrato) task, all users gave their first preference to a force sensor.

The remaining data is being analysed for indications of learning time and output quality, and will be available shortly.

Examination of the effects of additional feedback on sensor-task mappings

Further phases of the experiments are also in progress. In order to evaluate the effects of additional feedback on the usability of the sensors for the tasks, participants are being asked to repeat the experiment with the addition of either audio or visual feedback to the procedure.

In the audio feedback condition, note production is no longer triggered by the key in the secondary hand; instead, a note sounds continuously, and its pitch is controlled by manipulating the sensor with the primary hand.
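The difference between key-triggered and continuous note production can be sketched as a per-frame decision. This is a hypothetical illustration: `FeedbackMode` and `control_frame` are invented names, and it assumes a simple gate model in which, outside the audio condition, the note sounds only while the key is held.

```python
from enum import Enum


class FeedbackMode(Enum):
    # Baseline and visual conditions: the key under the secondary hand sounds the note.
    KEY_TRIGGERED = "key"
    # Audio feedback condition: a note sounds all the time.
    CONTINUOUS_AUDIO = "audio"


def control_frame(mode, sensor_pitch, key_down):
    """Return the note that should sound for one control frame, or None for silence.

    sensor_pitch is the note currently selected with the primary hand;
    key_down is the state of the key under the secondary hand.
    Assumption: in key-triggered mode the note sounds while the key is held.
    """
    if mode is FeedbackMode.CONTINUOUS_AUDIO:
        return sensor_pitch  # the key is ignored; pitch simply follows the sensor
    return sensor_pitch if key_down else None
```

In the continuous mode the user hears every pitch passed through while moving the sensor, which is the extra feedback this condition provides.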

For the visual feedback, a graphical representation of the scale was created in the form of a row of empty, connected boxes. The current note is indicated by filling its corresponding box with solid red. Note production is again controlled by the secondary hand, using a key.
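A text-only stand-in for this display can be sketched as below. It is purely illustrative: `render_scale_boxes` is a hypothetical name, the number of boxes is a parameter because the text does not give it, and `#` stands in for the solid red fill.

```python
def render_scale_boxes(n_boxes, current):
    """Draw a row of connected boxes, filling the box of the current note.

    A filled box is drawn as '#', standing in for the solid red fill
    of the original graphical display.
    """
    if not 0 <= current < n_boxes:
        raise ValueError("current note index out of range")
    cells = "|".join("#" if i == current else " " for i in range(n_boxes))
    return "|" + cells + "|"
```

For example, `render_scale_boxes(4, 1)` returns `"| |#| | |"`.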

Adding feedback has made the tasks easier to achieve, while a perceived difference in usability between the sensors remains. The usefulness of each form of feedback appears to be highly task-dependent: the note selection task benefits most from visual feedback, and the note modulation task benefits most from constant audio feedback.

It has also been noted that users' ordering of the sensors changes when feedback is added, indicating that some sensors benefit more from additional feedback than others.

Conclusions and Further Work

This project has aimed to discover whether mappings exist between specific sensors, tasks and feedback in interfaces for computer musical instruments. Preliminary results indicate that such mappings do seem to exist, based on the consistency of users' judgements of sensor suitability for specific tasks.

What remains is to determine these mappings from the available experimental data and to derive guidelines that could be used in the design of computer musical instruments.

References

- [1] R. Vertegaal, T. Ungvary and M. Kieslinger, "Towards a Musician's Cockpit: Transducers, Feedback and Musical Function", in Proc. of ICMC 1996, Hong Kong, 1996.

- [2] M. M. Wanderley, J. Viollet, F. Isart and X. Rodet, "On the Choice of Transducer Technologies for Specific Musical Functions", in Proc. of ICMC 2000, Berlin, Germany, 2000.

- [3] B. Shackel, "Human Factors and Usability", in J. Preece and L. Keller (eds.), Human-Computer Interaction: Selected Readings, 1990.

- [4] N. Orio, N. Schnell and M. M. Wanderley, "Input Devices for Musical Expression: Borrowing Tools from HCI", presented at the NIME workshop at ACM CHI 2001, Seattle, USA, 2001.