IDMIL News!

Marcelo Wanderley awarded a Francqui Chair at the University of Mons

Prof. Marcelo Wanderley was awarded a Francqui Chair at the University of Mons, Belgium, by the Francqui Foundation.

He will present five lessons in October 2017 at the Numédiart Institute in Mons, in which he will discuss several aspects of multidisciplinary research on music, science and technology.

Lesson Schedule:

- Inaugural lecture, October 5: “Easier Said than Done” - On more than Two Decades of Applied Multidisciplinary Research Across Engineering, Science and Music

- October 6: Human-Computer Interaction and Computer Music: Similarities, Differences and Opportunities for Cross-Fertilization

- October 10: Digital Musical Instruments: Design of and Performance with New Interfaces for Musical Expression

- October 11: Analysis of Performer Movements: Techniques and Applications

- October 12: Creating Large-Scale Infrastructures for Multidisciplinary Research in Music Media and Technology: The Case of the Centre for Interdisciplinary Research in Music Media and Technology, CIRMMT/McGill University

Best Paper Award at HCII 2017

Ian Hattwick, Ivan Franco and Marcelo Wanderley win Best Paper Award at 2017 HCI Conference

The HCI International Conference recently announced that the paper “The Vibropixels: a Scalable Wireless Tactile Display System” has received the Best Paper Award of the Human Interface and the Management of Information Thematic Area at the 2017 HCI International Conference in Vancouver, BC. The paper presents a brief overview of the VibroPixel tactile display system and discusses how the system was designed to facilitate its use in the artistic creation process.

Call for Abstracts - CIRMMT Symposium on Force Feedback and Music

The Centre for Interdisciplinary Research in Music Media and Technology (CIRMMT) and the Input Devices and Music Interaction Laboratory (IDMIL), McGill University, will organize a 2-day workshop on December 9-10, 2016, to discuss the state-of-the-art and the possible future directions of research on force-feedback and music (FF&M).

We would like to invite submissions of research reports (abstracts) to be presented during the event.

Summary of Important Information:

- CIRMMT Symposium on Force Feedback and Music

- When: December 9 – 10, 2016

- Where: CIRMMT, McGill University, Montreal, Qc, Canada (www.cirmmt.org)

- Deadline for abstract submission: Monday, November 14, 2016

- Acceptance notification: Thursday November 17 2016

Context: Though haptics research in music is a very active field, it currently seems dominated by tactile interfaces, due in part to the widespread availability of vibrotactile feedback in portable devices. Research on force-feedback in musical applications is not recent, with some of its early contributions dating back to the end of the 1970s, but it has traditionally suffered from issues such as hardware cost and the lack of community-wide access to software and hardware platforms for prototyping musical applications. Despite this, several recent works have addressed the topic, proposing software platforms and simulation models.

We believe that it is then time to probe the current state of research on Force-feedback and music (FF&M).

Topics: We invite the submission of abstracts summarizing innovative work addressing force-feedback applications to music and media, including, but not limited to, the following topics:

- Music and media applications of force-feedback systems

- FF models of musical actions

- Audio and mapping systems adapted to force-feedback applications

- Open-hardware and open-source solutions for developing and sharing force-feedback/musical projects

- Evaluation of system quality vs cost in musical applications

- The pros/cons of existing hardware and software systems

- The obsolescence of hardware and software systems and ways to deal with it

- Perceptual issues in FF&M

Abstract submission: To propose a contribution, please send a one-page summary of your proposal detailing:

- the research you carry out

- its current results

- its future perspectives

Deadlines and how to submit: The deadline for abstract submission is Monday November 14 2016. Selection results will be announced on Thursday Nov 17.

Please send your one-page abstract to reception@cirmmt.mcgill.ca, with the email subject: [FF&M 2016] Contribution by “XXX”

Support: The CIRMMT Symposium on Force Feedback and Music is sponsored by the Input Devices and Music Interaction Laboratory, McGill University, the Inria-FRQNT MIDWAY “équipes de recherche associées” project, and the Centre for Interdisciplinary Research in Music Media and Technology, McGill University.

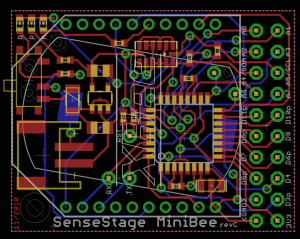

Introducing the MiniBee

The MiniBee is a small, Arduino-like circuit board designed for the SenseStage project, a collaboration between the IDMIL and Chris Salter's lab at Concordia University. The boards include the footprint for an XBee mesh-networking wireless transceiver, creating compact, low-cost sensing nodes that we are now using to investigate ubiquitous computing in the media arts.

The first batch of 100 MiniBee Rev.A boards has been manufactured, assembled and successfully tested.

Different Strokes Video

Different Strokes is a software system for performing music on a computer. It aims to allow a performer to create much of his or her performance sequences on-stage instead of relying on prepared control material.

Concert Experimental Music from Brazil and Beyond

Video has now been posted from the concert “Experimental Music from Brazil and Beyond” at the University of Lethbridge. The concert featured two performances with T-Stick: a solo written by Patrick Hart and D. Andrew Stewart, and a trio improvisation by Fernando Rocha (percussion, including the Hyper-Kalimba), Elise Pittenger (cello) and D. Andrew Stewart (soprano T-Stick).

CIRMMT's Workshop on Motion Capture for Music Performance, October 30-31, 2006

- More information at the workshop webpage

- Some photos of the event (see all photos):

Workshop on MoCap Data Exchange

The Workshop on MoCap Data Exchange and the Establishment of a preliminary Database of Music Performances (MoCap and Video) will take place during the SMPC 2007 Conference.

Organizers: Isabelle Cossette (CIRMMT, McGill University), Marcelo M. Wanderley

Time: July 30, 2007, 9:30 am to 4 pm.

Location: New Music Building, Room A-832, McGill University, 555 Sherbrooke Street West, Montreal

Video featuring fMRI-compatible musical instruments

The development of an fMRI-compatible cello (Avrum Hollinger's Ph.D. thesis) was featured recently in a video shown on Canal Savoir and referenced in Wired Magazine. These instruments are used by neuroscientists and psychologists to study the brains of musicians while they play their instruments.

Upcoming T-Stick Concert

T-Stick Music

Wednesday, May 4, 8pm

ISSUE PROJECT ROOM

The (OA) Can Factory

232 3rd Street

3rd Floor

Brooklyn, NY

Admission: $10 / $8 for members

Five artists come together to mark a unique moment in the development of digital instrumentalities and music. The T-Stick digital musical instrument, built in 2006, will take center stage in the world premieres of five new works, among the first music to be formally created for this instrument. D. Andrew Stewart, the leading expert in T-Stick performance, has invited five American and Canadian composers to collaborate in the development of repertoire for the T-Stick. He is attempting to establish a tradition and approach to creating music with electronic musical instruments. The five premieres, which are the result of collaborations between Stewart and the composers of the 2010 T-Stick Composition Workshops, will be performed along with established T-Stick music, as well as improvisations combining digital and acoustic instrumentalists.

Upcoming performances by McGill Digital Orchestra

The McGill Digital Orchestra will premiere two new works for digital musical instruments on Wednesday, March 5 in Pollack Hall, McGill University, Montréal. The works, The Long And The Short Of It by Heather Hindman, and sounds between our minds by D. Andrew Stewart, will be performed as part of the MusiMars/MusiMarch contemporary music festival. Both pieces were supported by CIRMMT Director's Interdisciplinary Excellence Prizes.

The two pieces will be performed by the same ensemble, consisting of cellist Erika Donald, pianist Xenia Pestova, and percussionist Fernando Rocha; however, the ensemble will primarily be playing new digital musical instruments developed and refined for the project.

The night before, Sean Ferguson's Ex Asperis will also be premiered as part of the festival. Developed using resources from both the digital orchestra project and research on Compositional Applications of Auditory Scene Synthesis in Concert Spaces Via Gestural Control, the piece calls for an ensemble of 12 players, solo cello, and live gesture-based spatialization. The cello and spatial effects will be performed by digital orchestra members Chloé Dominguez and Fernando Rocha, respectively.

The McGill Reporter ran a short article about the project and upcoming performance.

Unsounding Objects Premiere

The premiere of Unsounding Objects took place on February 7, 2013, at the Live@CIRMMT New Instruments concert. A Montreal Gazette article about the concert features Unsounding Objects.

Here is a video of the composition:

Call for Submissions - CMJ Special Issue “Advances in the Design of Mapping for Computer Music”

Guest Editors: Marcelo M. Wanderley and Joseph Malloch

When we use digital tools for making music, the properties and parameters of both sound synthesizers and human interfaces have an abstract representation. One consequence of the digital nature of these signals and states is that gesture and action are completely separable from sound production, and must be artificially associated by the system designer in a process commonly called mapping.

The importance of mapping in digital musical instruments has been studied since the early 1990s, with several works discussing the role of mapping and many related concepts. Since roughly the mid-2000s, several tools have been proposed to facilitate the implementation of mappings, drastically reducing the necessary technical knowledge and allowing a large community to easily implement their ideas. Coupled with the availability of inexpensive sensors and hardware, as well as the emergence of a strong Do-It-Yourself community, the time seems right to discuss the main directions for research on mapping in digital musical instruments and interactive systems.

This call for submissions for a special issue of the Computer Music Journal focuses on recent developments and future prospects of mapping.

Relevant topics include, but are not limited to:

- Mapping in instrument/installation/interaction design

- Mapping concepts and approaches

- Mapping tools

- Evaluation methodologies

- Mapping in/as composition

- Mapping for media other than, or in addition to, sound

The deadline for paper submission is March 15, 2013. The issue will appear in 2014. Submissions should follow all CMJ author guidelines (http://www.mitpressjournals.org/page/sub/comj). Submissions and queries should be addressed to marcelo.wanderley@mcgill.ca, with the subject starting with [CMJ Mapping].

TIEM database online

The database for the Taxonomy of Realtime Interfaces for Electronic Music Performance (TIEM) project is now available online. It currently includes information on 45 interfaces and instruments submitted to the project's online survey. From the website:

We are interested in exploring the practice and application of new interfaces for real-time electronic music performance. This research is part of an Australian Research Council Linkage project titled “Performance Practice in New Interfaces for Realtime Electronic Music Performance”.

This research is being carried out at VIPRE MARCS Auditory Laboratories, the University of Western Sydney in partnership with EMF, Infusion Systems and The Input Devices and Music Interaction Laboratory (IDMIL) at McGill University.

The McGill Reporter features IDMIL

Check out the article about some of the IDMIL's research in the May 17, 2007 edition of the McGill Reporter.

An excerpt:

At McGill, researchers study the significance of how performers move while they play a musical instrument. Using this information, Marcelo Wanderley, of the Schulich School of Music creates innovative digital musical instruments, such as the gyrotyre, that are designed to enhance the experience for both the audience and the performer.

Professor Wanderley analyzes the relationships between movements of performers and the sounds they produce. His team of graduate students uses 3D infra-red motion capture systems to identify patterns of musical notes and correlate these with the motions of the performer. Marcelo found that musicians always move their bodies in the same patterns when they play the same piece of music, even after a nine-month interval.

Tech showcase at SERI Montreal

SERI Montreal will take place on Monday 1st of May, 2016. This year's theme is “La mobilité, du véhicule à la molécule” (“Mobility, from vehicle to molecule”). The purpose of this event is to give visibility to researchers from all Montreal universities. Approximately 250 companies will participate, making it an opportunity to present what the IDMIL is doing in the field of mobility (in the broad sense). One third of the companies work in areas such as big data, virtual reality and medical devices (see a video on their YouTube channel).

IDMIL researchers Ian Hattwick and Baptiste Caramiaux will attend the event and demonstrate some of the latest movement and music technologies: from realtime movement analysis to hardware controllers.