OM-Pursuit: Dictionary-Based Sound Modeling

| Participants: | Marlon Schumacher, Graham Boyes (collaborator) |
|---|---|
| Funding: | CIRMMT student award 2011/2012; FQRNT DED scholarship; CIRMMT Director's Interdisciplinary Excellence Prize |
| License: | GPL |
| Time Period: | 2011–present (ongoing) |

Overview

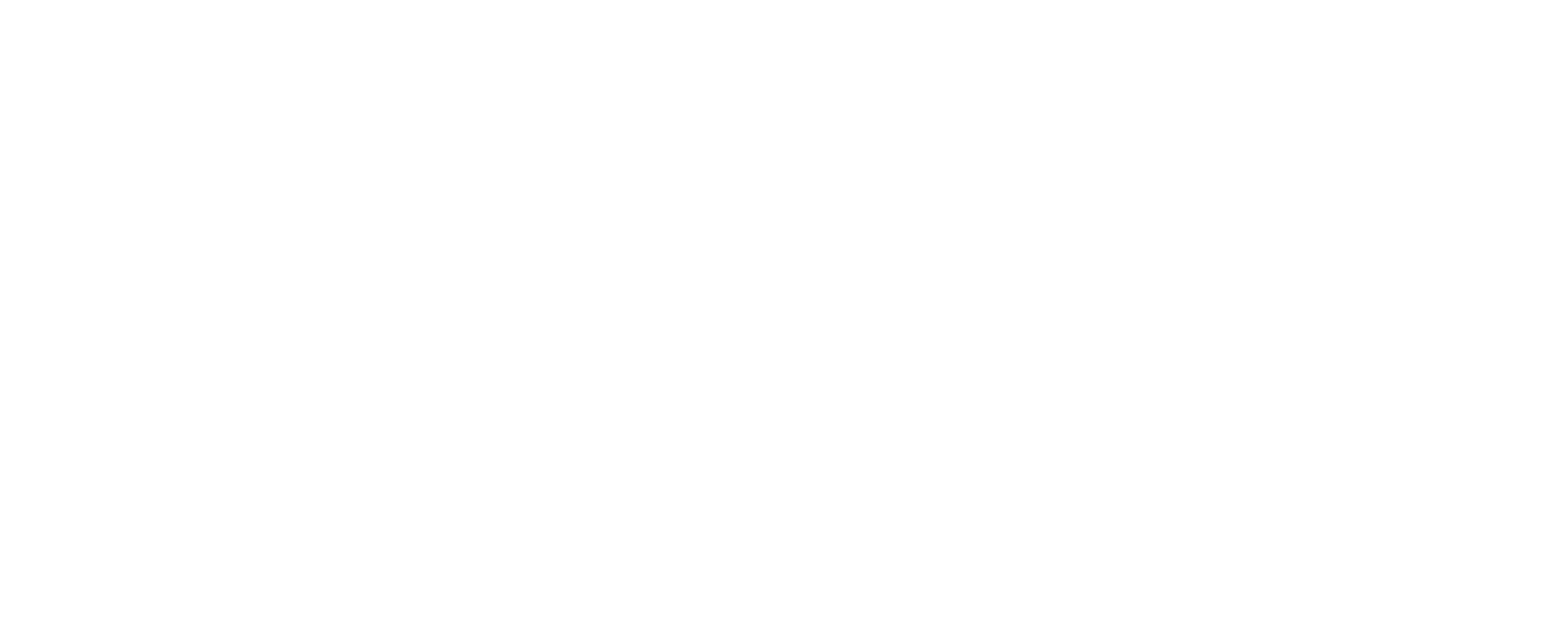

OM-Pursuit is a library for corpus-based sound modeling in OpenMusic.

Parametric sound representations have long served as conceptual models in compositional contexts (see e.g. the French spectralist school). Today a number of software tools allow composers to derive symbolic data from continuous sound phenomena, for example by extracting frequency structures via sinusoidal partial tracking. Most of these tools, however, are based on the Fourier transform, which decomposes (static) frames of a time-domain signal into sinusoidal (frequency) components. This method can be less adequate for faithfully representing non-stationary sounds such as noise and transients. Dictionary-based methods offer a different model, representing sound as a linear combination of individual atoms stored in a dictionary. Mostly used for sparse representation of signals for audio coding purposes (compression, transmission, etc.), this atomic model offers interesting new possibilities for computer-aided composition applications, such as algorithmic transcription, orchestration, and sound synthesis.

Using sound samples as atoms for the dictionary, we can design the 'timbral vocabulary' of an analysis/synthesis system. The dictionaries used to analyze a given audio signal can be built from arbitrary collections of sounds, such as instrumental samples, synthesized sounds, recordings, etc. OM-Pursuit uses an adapted matching pursuit algorithm (see pydbm by G. Boyes) to iteratively approximate a given sound using a combination of the samples in the dictionary, in a way comparable to photo-mosaicing techniques in the visual domain.
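The iterative approximation described above can be sketched in a few lines of Python. This is a minimal, unoptimized illustration of plain matching pursuit with sample-based atoms, not the adapted algorithm implemented in pydbm; the function names (`normalize`, `matching_pursuit`) and the toy signals are assumptions made for this example.

```python
import math

def normalize(atom):
    """Scale an atom to unit energy so that correlations are comparable."""
    norm = math.sqrt(sum(x * x for x in atom))
    return [x / norm for x in atom]

def matching_pursuit(target, dictionary, n_iterations):
    """Greedily approximate `target` with time-shifted, scaled atoms.

    Returns (model, residual, events); each event is a tuple
    (atom_index, onset_in_samples, gain).
    """
    atoms = [normalize(a) for a in dictionary]
    residual = list(target)
    model = [0.0] * len(target)
    events = []
    for _ in range(n_iterations):
        best = None  # (abs_correlation, atom_index, onset, gain)
        for k, atom in enumerate(atoms):
            for onset in range(len(residual) - len(atom) + 1):
                # Inner product of the atom with the residual at this onset.
                corr = sum(a * residual[onset + i] for i, a in enumerate(atom))
                if best is None or abs(corr) > best[0]:
                    best = (abs(corr), k, onset, corr)
        if best is None or best[0] == 0.0:
            break  # nothing left that the dictionary can explain
        _, k, onset, gain = best
        # Add the scaled atom to the model and subtract it from the residual.
        for i, a in enumerate(atoms[k]):
            model[onset + i] += gain * a
            residual[onset + i] -= gain * a
        events.append((k, onset, gain))
    return model, residual, events

# Toy example: two short "samples" as the dictionary (hypothetical data).
dictionary = [[1.0, 0.0, -1.0], [1.0, 1.0]]
target = [0.0, 2.0, 2.0, 0.0, 0.5, 0.0, -0.5, 0.0]
model, residual, events = matching_pursuit(target, dictionary, n_iterations=2)
```

Each iteration picks the atom and onset that best correlate with what is still unexplained, so the number of iterations directly controls the resolution of the model. The exhaustive search over all onsets is chosen here for readability only; practical implementations typically compute these correlations far more efficiently.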

Communication between OpenMusic and the DSP kernel (the pydbm executable) is handled via an SDIF interface and scripts, which are generated and manipulated using the visual programming tools in the computer-aided composition environment. The decomposition of a target sound results in a sound file (the model), the residual (the difference between target and model), and a parametric description of the model. We developed a number of tools for visualizing and editing this model using different representations (spatial, tabulated, temporal). The model data can be used and manipulated within OpenMusic in a variety of contexts, from the control of sound spatialization and synthesis processes, to computer-aided orchestration and transcription, to graphical notation of musical audio.

Applications

Atomic Decomposition offers interesting possibilities for musical applications:

- Iterative Approximation. Control over degree/resolution of the model

- Approximation is based directly on the signal rather than signal descriptors

- Dictionaries can be arbitrarily designed and combined (sample libraries, field-recordings, etc.)

- Model is naturally polyphonic (juxtaposition and superposition of sound grains)

- Constrained Matching Pursuit (constraint-based control over distribution of sound grains)

- Process leaves a “residual”: multiple iterative decompositions (serial, parallel) of the same target are possible, e.g. for different orchestrations
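Two of the points above, constraint-based control and serial decomposition of the residual, can be illustrated with a small Python sketch. How OM-Pursuit expresses its constraints is not described here, so the predicate interface `allowed(atom_index, onset, events)`, the helper `min_spacing`, and the toy dictionaries named `strings` and `percussion` are all hypothetical choices for this example.

```python
import math

def constrained_pursuit(target, atoms, n_iterations, allowed):
    """Matching pursuit that only considers candidates accepted by `allowed`.

    `atoms` are assumed to have unit energy. `allowed(atom_index, onset, events)`
    is a hypothetical user-supplied predicate over the events chosen so far.
    Returns (residual, events) with events as (atom_index, onset, gain).
    """
    residual = list(target)
    events = []
    for _ in range(n_iterations):
        best = None  # (atom_index, onset, gain)
        for k, atom in enumerate(atoms):
            for onset in range(len(residual) - len(atom) + 1):
                if not allowed(k, onset, events):
                    continue  # candidate violates the constraint
                corr = sum(a * residual[onset + i] for i, a in enumerate(atom))
                if best is None or abs(corr) > abs(best[2]):
                    best = (k, onset, corr)
        if best is None or best[2] == 0.0:
            break
        k, onset, gain = best
        for i, a in enumerate(atoms[k]):
            residual[onset + i] -= gain * a
        events.append(best)
    return residual, events

def min_spacing(spacing):
    """Constraint: no two grains may start closer than `spacing` samples."""
    def allowed(k, onset, events):
        return all(abs(onset - prev) >= spacing for _, prev, _ in events)
    return allowed

# Constraint in action: the second grain may not start next to the first.
_, spaced = constrained_pursuit([5.0, 4.0, 0.0, 1.0], [[1.0]], 2, min_spacing(2))

# Serial decomposition: the residual of one pass is the target of the next,
# each pass using a different (toy) dictionary.
s = 1 / math.sqrt(2)
strings = [[s, s]]
percussion = [[1.0]]
target = [0.0, 2.0, 2.0, 0.0, 3.0, 0.0]
residual_a, events_a = constrained_pursuit(target, strings, 1, min_spacing(2))
residual_b, events_b = constrained_pursuit(residual_a, percussion, 1, min_spacing(2))
```

In the first call the strongest remaining sample (at onset 1) is skipped because it lies too close to the grain already placed at onset 0. In the serial example, the second pass only has to account for what the first dictionary could not explain.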

Interesting applications are possible in combination with OMPrisma (dictionary-based spatialization), and OM-SoX (batch-processing for designing dictionaries, e.g. transpositions, filterings, etc.).

OM-Pursuit was awarded the CIRMMT Director's Interdisciplinary Excellence Prize.

In a recent project we have employed OM-Pursuit for the notation of musical audio:

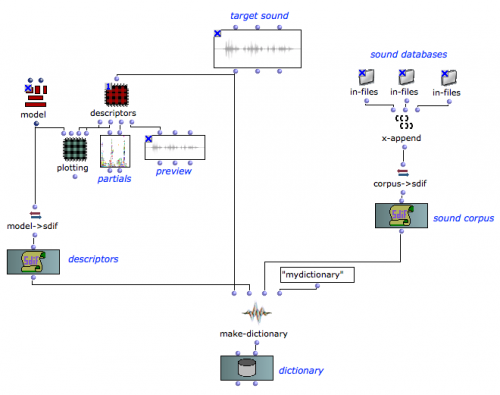

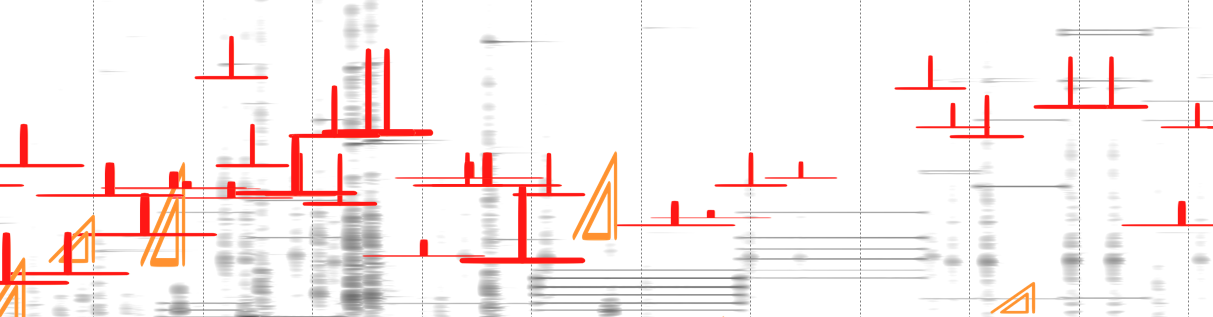

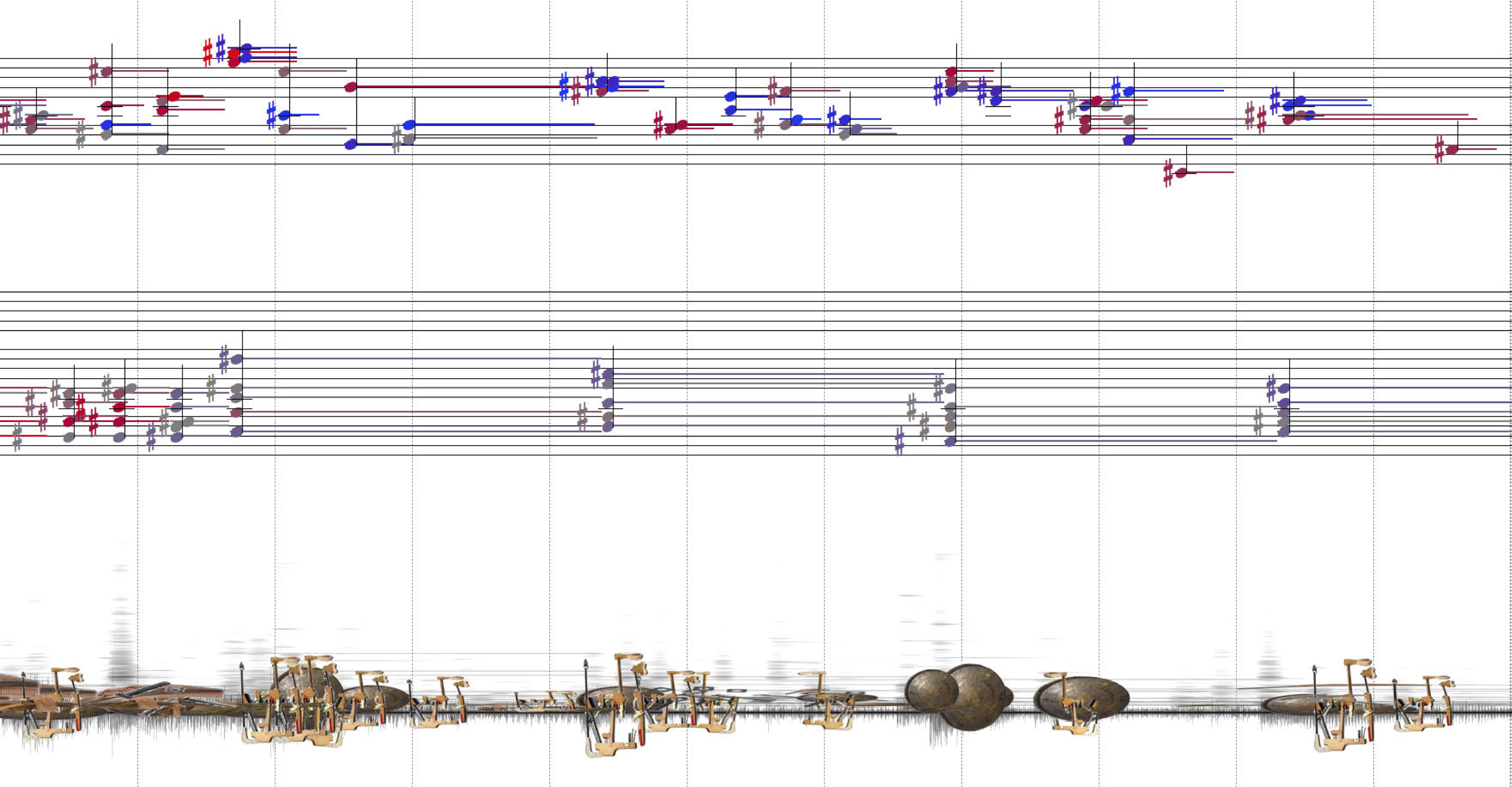

The notation of musical audio (such as a tape part in electroacoustic music) is typified by two extremes on a continuum. At one end are signal-based notations, such as sonograms and waveforms, which however don’t provide information about musical semantics. At the other end are symbolic notations, such as icons and characters, which in turn don’t provide information about the sonic details. Both aspects, however, are important for the interpretation of a musical work, in particular for contemporary music, which does not rely on common musical idioms. Using dictionary-based approaches (OM-Pursuit), an audio signal can be analyzed and transcribed based on a user-defined collection of smaller-scale sound files (so-called ‘atoms’). The individual atoms can be associated with arbitrary visual representations (symbolic, iconic, etc.). The user can then design simple programs to algorithmically arrange these visual elements on a 2D canvas into a kind of cartographic map of the higher-level sonic content in the signal. The screenshots here are from early experiments with the system, including one from my piece for 2 pianists and tape “6 Fragments on 5’05” - 1 Act of cleaning a piano”.
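As a rough illustration of such a "simple program", the following Python sketch maps decomposition events to glyphs on a 2D canvas. It is not the OpenMusic implementation: the `Glyph` class, the `layout_glyphs` function, the event format (atom index, onset in samples, gain), and the mapping itself (time on the horizontal axis, one row per atom, size by gain) are assumptions chosen for this example.

```python
from dataclasses import dataclass

@dataclass
class Glyph:
    symbol: str   # visual representation associated with an atom
    x: float      # horizontal position (time axis), in canvas units
    y: float      # vertical position, in canvas units
    size: float   # drawn size, scaled by the atom's gain

def layout_glyphs(events, symbols, sample_rate, duration, width, height,
                  max_size=24.0):
    """Place one glyph per event: time runs left to right, one row per atom."""
    if not events:
        return []
    # Loudest event gets `max_size`; fall back to 1.0 if all gains are zero.
    peak = max(abs(gain) for _, _, gain in events) or 1.0
    row_height = height / len(symbols)
    glyphs = []
    for atom_index, onset, gain in events:
        x = (onset / sample_rate) / duration * width
        y = (atom_index + 0.5) * row_height
        size = max_size * abs(gain) / peak
        glyphs.append(Glyph(symbols[atom_index], x, y, size))
    return glyphs

# Hypothetical events: (atom_index, onset_in_samples, gain).
events = [(1, 0, 0.5), (0, 22050, 1.0), (1, 66150, 0.25)]
symbols = ["circle", "triangle"]
glyphs = layout_glyphs(events, symbols, sample_rate=44100, duration=2.0,
                       width=800, height=200)
```

Any other mapping could be substituted, for example placing glyphs vertically by a pitch or brightness descriptor of the atom instead of by its index.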

This work was presented at the 1st Symposium on Music Notation at Université IV, Paris Sorbonne, organized by AFIM/MINT-OMF workgroup Les nouveaux espaces de la notation musicale.

Sound Examples

- See the set on SoundCloud for some audio examples.

Downloads

Artistic Works

OM-Pursuit has been used for the composition of a number of works, most notably:

- “Spielraum” for Violin, Cello, 2 dancers and New Digital Musical Instruments (Marlon Schumacher, 2013).

Commissioned for the research-creation project "Les Gestes". Premiered at Agora de la danse, Montreal, Canada, March 2013.

- “Spin” for extended V-Drums, Interactive Video and Live-Electronics (Marlon Schumacher, 2012).

Commissioned by Codes D'Acces. Premiered at the "Prisma et Les Messagers" concert, Usine-C, Montreal, Canada, April 2012.

- “Ab-Tasten” for Disklavier, 4 Virtual Pianos and Sound Synthesis (Marlon Schumacher, 2011).

Commissioned by CIRMMT. Premiered at the live@CIRMMT concert Clavisphere, Montreal, Canada, 2011.

- Binaural Live-Recording McGill MultiMedia Room, April 14, 2011.