WYSIWYG - Wearable Sounds, Gestural Instruments

| Participants: | Marcelo M. Wanderley (co-PI), David Birnbaum, Rodolphe Koehly, Elliot Sinyor, Doug Van Nort, Joseph Malloch |
|---|---|
| Collaborators: | Sha Xin Wei (Concordia University, co-PI), Freida Abtan (Concordia University), David Gauthier (Concordia University) |
| Funding: | Hexagram grant (2006-2007) |
| Project Type: | Collaborative Project |
| Time Period: | Sept. 2006–Sept. 2007. (Completed.) |

Project Description

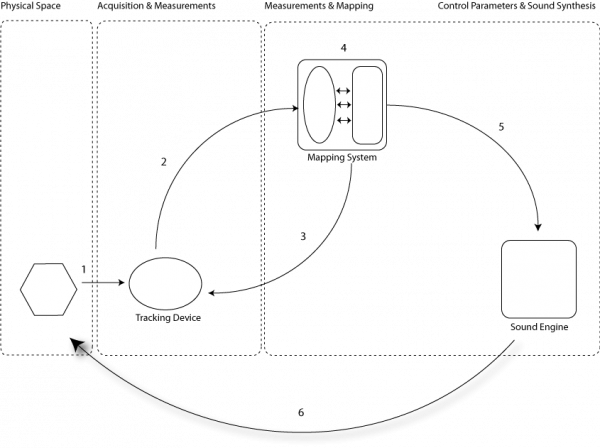

WYSIWYG (Wearable Sounds Gestural Instruments) aims to create a suite of cloth-based controllers that transform freehand gestures into sounds. These soft, wearable instruments can also be embedded into furnishings or rooms, or used as props in improvised play, responding with sound to diverse parameters such as proximity, movement, and history of activity. The interactions are designed in the spirit of games like hide-and-seek, blind-man’s buff, and Simon Says, which work well with a variable number of players in live, ad hoc, multiplayer events as well as in rehearsed performances.

The WYSIWYG project includes several research directions: the gestural and social context, the physical cloth controller, and software for feature extraction, mapping, and sound synthesis. Sound expression in this context de-emphasizes cognitive models of “instruments” in favor of leveraging socially-embedded expectations of handkerchiefs, scarves, blankets, and tapestries.